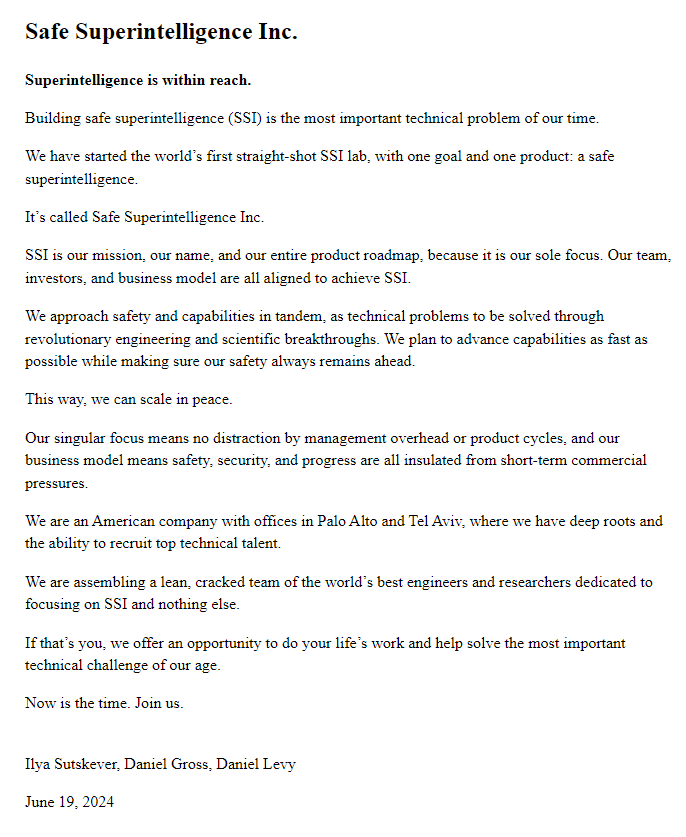

On June 19, Ilya Sutskever, along with Daniel Gross and Daniel Levy, announced that they are starting Safe Superintelligence Inc. What does this mean, and what does it not mean?

What Did Ilya See?

The background to this initiative is partly the firing and re-hiring of Sam Altman as CEO of OpenAI, back in November 2023. There has been a lot of speculation about what really caused the board to fire Sam, some of which became clearer when (then) board member Helen Toner spoke out. But while Helen Toner and others have said some things explicitly, such as the board not being notified before ChatGPT was launched, they also make clear that there are things they cannot talk about. Notably, Ilya Sutskever was a member of the board that fired Sam, and firing him wouldn’t have been possible without his vote.

All the speculation and secrecy around what actually caused the firing led to the meme What Did Ilya See, but the true answer seems to be that a lot of small and medium issues simply accumulated until a majority of the board no longer trusted Sam Altman to lead OpenAI on the way to AGI.

While a lot of people at OpenAI wanted Sam Altman back, events over the last month or two also show that people working on safety at OpenAI are not satisfied. A number of safety people have left OpenAI, culminating in Ilya Sutskever and Jan Leike leaving in May, with Jan Leike saying that safety has taken a backseat to shiny products. OpenAI’s ambitious Superalignment Initiative, announced in July 2023, has been disbanded, though new and less ambitious work has started.

In short: Leading AI safety researchers no longer feel that OpenAI is doing a good job at AI safety.

This Has Happened Before

It is worth noting that OpenAI was founded to create safe artificial intelligence that benefits the whole of humanity, which is still very clear in its mission statement. It managed to gather top talent interested in developing AI and keeping AI risks under control. OpenAI was by no means created as a company that should prioritize shiny products over safety – quite the opposite.

In 2019, OpenAI received a fair bit of criticism from the (then quite small) AI safety community, for two reasons. The smaller one was not releasing GPT-2 openly, contrary to previous commitments – a decision motivated by safety concerns, which in comparison to today’s AI seem rather exaggerated. The larger reason was that Microsoft invested one billion USD and the (capped) for-profit part of OpenAI was started.

The for-profit label made some employees at OpenAI fear that shiny products would get priority over safety. This led to Anthropic being founded in 2021 by seven former employees of OpenAI, including Dario Amodei – former Vice President of Research at OpenAI. Anthropic is now one of the leading AI companies, with the LLM and chatbot Claude.

Anthropic has a much better reputation for taking AI safety seriously, which led Jan Leike to choose to work at Anthropic rather than OpenAI.

Ilya Sutskever, though, seems to think that Claude is a bit too shiny. Instead of going to Anthropic, he is starting Safe Superintelligence Inc.

Three Questions

Will Ilya and Safe Superintelligence Inc. (SSI) manage to create safe AI for the benefit of the whole of humanity? Apart from all the technical challenges, I think the question can be broken down into these three.

Will SSI Attract Enough Talent?

It is often said that you need three ingredients to build AI: data, compute and algorithms. (I’d argue that energy is about to become a fourth ingredient, but that’s another story.)

Until we have really clever AI, algorithms come from talented people, which is why top AI engineers are fought over by the leading companies. Ilya Sutskever is a big name, and there are plenty of talented AI engineers who care a lot about AI safety. So SSI stands a good chance of attracting the talent they need.

Will SSI Get the Funding They Need?

The second ingredient – compute – is trickier. To get compute, you need money, on the order of several billion USD.

Raising that kind of money is difficult, and will require some really big investors. After Microsoft’s semi-acquisition of the AI company Inflection, it is becoming clearer that the AI race is a game only for the richest players, and the buy-in may be more than SSI manages to gather.

Will SSI Keep Prioritizing Safety?

The most crucial question, though, is whether Safe Superintelligence Inc. will go the same way as OpenAI. I have no doubt that Ilya Sutskever prioritizes safety over shiny products, and that he will do the same in five, ten and twenty years. But I am far from certain that he will be the one making the calls at the company. To get the billions necessary to train AI – which is necessary if you want to work on technical AI safety – you will most likely need to sell part of the company’s soul. On top of that, the shinier the products become, the greater the risk of prioritizing them over safety. Even if Ilya is untouchable by such ideas, others at SSI might not be.

The real lesson to take from the firing and re-hiring of Sam Altman is not that OpenAI is nothing without its people, but that a board did not really have the power it was supposed to have – rightly used or not.
